By Dan James.

Are we asking the right questions? Are we getting answers at the right time? Do our monitoring, evaluation and learning (MEL) systems help us with the important decisions? How should we present data to support decision-making?

Going beyond the narrow focus on M&E tools and methods to think holistically about how organisations learn has been a longstanding part of INTRAC’s practice. For example, the final day of our advanced monitoring and evaluation course invites participants to assess their own organisations’ learning capabilities, drawing on work by Bruce Britton.

As for many organisations in the UK, spring brings another planning cycle for us: reflecting on the current year and planning for the next. You may be wondering how an organisation like INTRAC monitors, evaluates and learns from its work to inform planning. Given that a large part of our business involves supporting others on planning, monitoring, evaluation and learning, we should be state of the art. Well, like the chef who eats baked beans on toast after their shift, we have not always given our own M&E the same attention that we give to that of others.

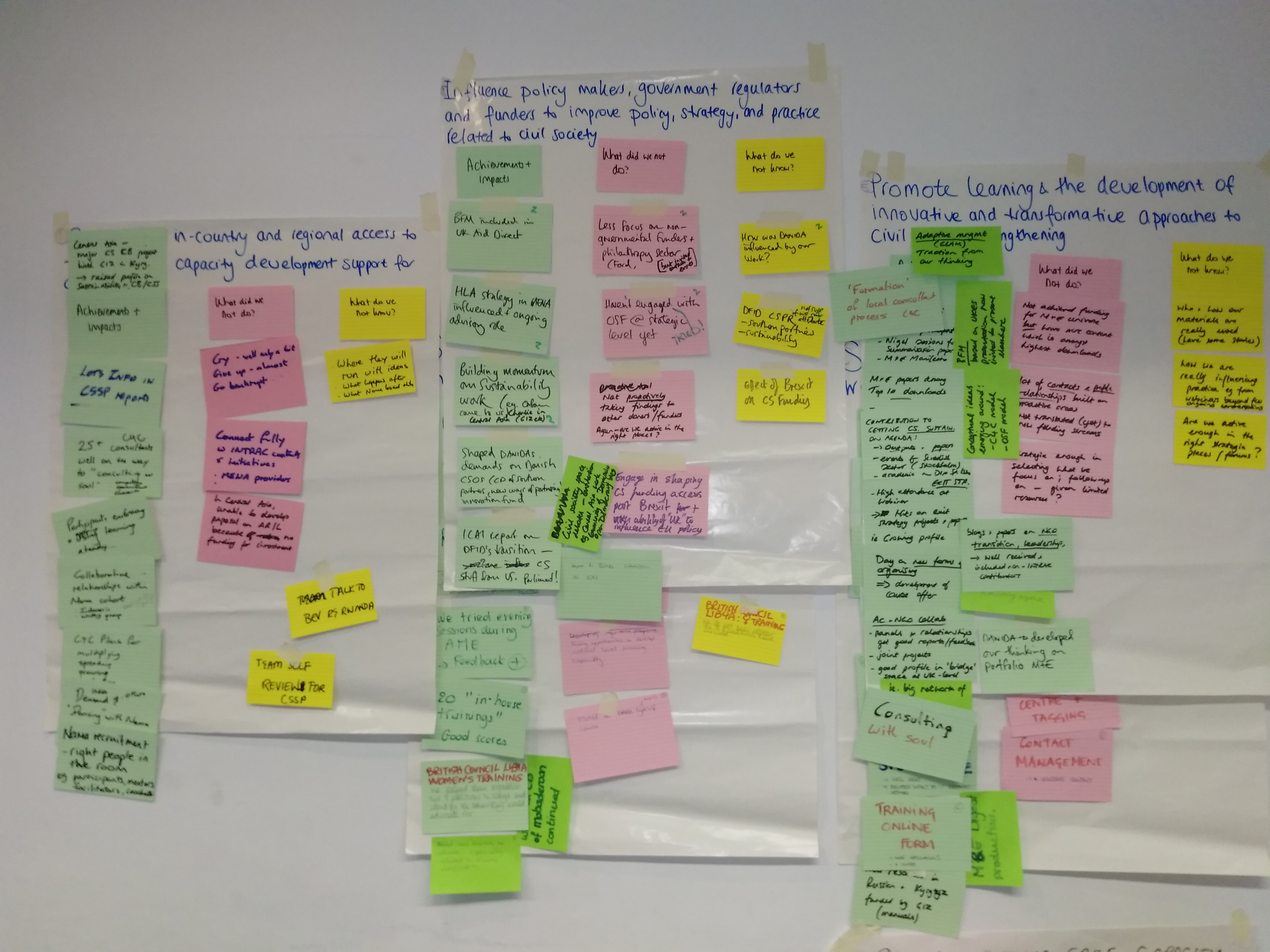

After a founder transition in 2015, INTRAC went through a strategic planning process to provide a framework and focus for the next five years. The process led us to a new strategy and required us to articulate our expected ‘contribution’ to strengthening civil society more clearly. The strategy provides an opportunity for refreshing our organisation-level M&E system, and over the last year we have tried to do just that. A planning session in February this year was the first chance to review the results and it threw up a number of challenges, both theoretical and practical.

Theoretical challenges

Like many organisations, we are finding that demonstrating our impact is challenging: we have a diverse portfolio of projects and activities, and are also quite far removed from the end beneficiaries of our work.

These are both issues we support other organisations to address. Drawing on Nigel Simister’s work on summarising portfolio change, we have largely opted for a ‘late-coding’ approach to assessing impact across our portfolio: allowing different parts of our work to generate different types of information, and then bringing these together to build a picture of impact. This avoids having to pre-define very specific indicators, but creates challenges in synthesising the results.

The distance – in terms of the chain of results – between our work and end beneficiaries makes it hard to assess our contribution, particularly for areas such as capacity change in individuals or organisations, or influencing policy. The long results chain also means that achievements are often not related to decisions or inputs during the current strategy period; they are the fruits of work in previous years.

Practical challenges

The process also threw up practical challenges, which will be familiar to all who have tried to implement MEL systems.

First, staff time is at a huge premium: time spent inputting data and reviewing past work is time that could otherwise be spent on delivering for our clients, or sharing and disseminating our work. Investing in MEL has a clear and immediate cost and, as yet, in relation to the new strategy, unknown benefits. We need to simplify our internal MEL processes as far as possible and avoid duplication.

Second, it is easy to fall into the trap of organisational silos. Not all M&E tools fit all aspects of our work, so we end up with different ‘reports’ from different parts of the organisation. We need to do more to integrate these into an organisation-wide system.

In spite of these challenges, the process has moved us forward in terms of our learning, particularly in relating what we have done to what we said we would do under the new strategy. For example, influencing policy in relation to civil society is part of our strategy, but is not an area where we have significant direct funding. However, we identified a substantial number of potential achievements to follow up and document more fully. For instance, our work around civil society sustainability led to significant input to the Independent Commission for Aid Impact’s review of DFID’s approach to managing exit and transition; and M&E support we provided for a multi-country pilot of Beneficiary Feedback Mechanisms informed an emphasis on beneficiary feedback in DFID’s civil society partnership review. We also documented areas where we had made less progress than planned and were able to take those forward into planning for 2017.

In relation to the MEL process itself, after reviewing the information generated, we decided on a number of changes: to introduce some ‘early coding’ to help us better document how our work relates to different strategy areas, and also to continue to pilot in-depth review processes to capture learning from some of our larger and longer-term initiatives.

This process has not been straightforward. We have – as an organisation – ‘lived’ some of the challenges that our clients face in operationalising MEL systems, and navigated trade-offs and pragmatic challenges when our own work is at stake. All of this provides a useful reminder to really focus on measuring what matters.

This post was originally published as a viewpoint in INTRAC’s February M&E Digest.